OpenAI has unveiled o3 and o4-mini, the newest additions to its o-series lineup that the company claims are its most intelligent models to date.

According to OpenAI, a key advancement is the models’ ability to function as agents by using and combining all tools available in ChatGPT, including web search, Python data analysis, image analysis, and image generation.

The models have reportedly learned to determine independently when and how to employ various tools to solve complex problems, typically completing tasks in under a minute.

OpenAI illustrates this with a prompt about energy consumption. The model combines web search, Python analysis, and chart generation, then explains the results in a comprehensive answer.

Thinking with images

Another significant advancement is the models’ ability to integrate images directly into their internal chain of thought, “thinking” with them rather than merely “seeing” them.

The models can also transform images while they reason, natively using tools to zoom, crop, or rotate them, as OpenAI explains in a blog post on visual reasoning.

In one example, OpenAI shows the model zooming in on hard-to-read handwriting that is upside down, flipping the image, and then correctly transcribing the text.

According to OpenAI, combining improved reasoning with full tool access leads to significantly stronger performance on academic benchmarks and real-world tasks. The goal is a more agentic ChatGPT that can carry out tasks more independently.

Setting new benchmark records

OpenAI o3, first introduced in December 2024 and refined since then, is reportedly the company’s most powerful reasoning model.

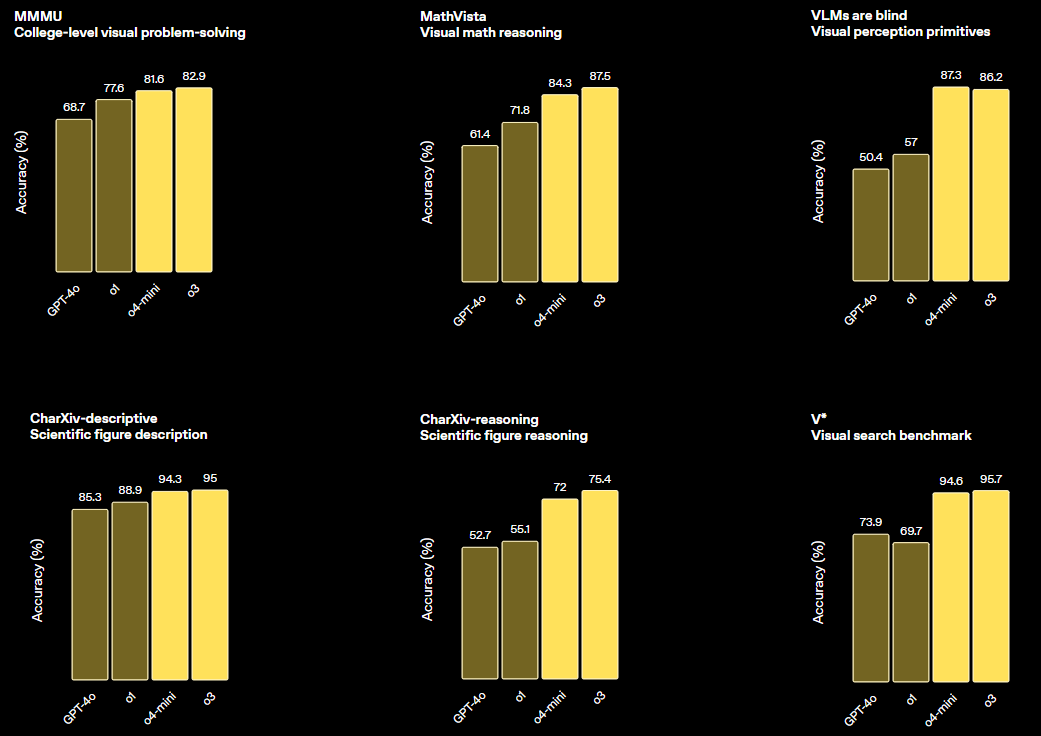

OpenAI says o3 shows improvements in coding, mathematics, science, and visual perception, setting new state-of-the-art (SOTA) results on benchmarks such as Codeforces, SWE-bench, and MMMU.

The company claims o3 makes 20 percent fewer serious errors than its predecessor o1 on difficult real-world tasks, particularly in programming, business/consulting, and creative ideation. Early testers reportedly highlighted the model’s analytical rigor and hypothesis generation capabilities.

o4-mini is a smaller variant optimized for speed and cost efficiency that OpenAI says delivers remarkable performance for its size and price, particularly on mathematics, coding, and visual tasks.

When given access to a Python interpreter, o4-mini reaches a pass@1 score of 99.5 percent on AIME 2025, which OpenAI describes as approaching saturation of this benchmark. According to OpenAI, o4-mini also outperforms its predecessor o3-mini on non-STEM tasks and in areas such as data science.

According to OpenAI, both models follow instructions better and give more useful answers that can be verified, partly because they draw on web sources. They should also feel more natural in conversation, in part because they can reference memory and past conversations.

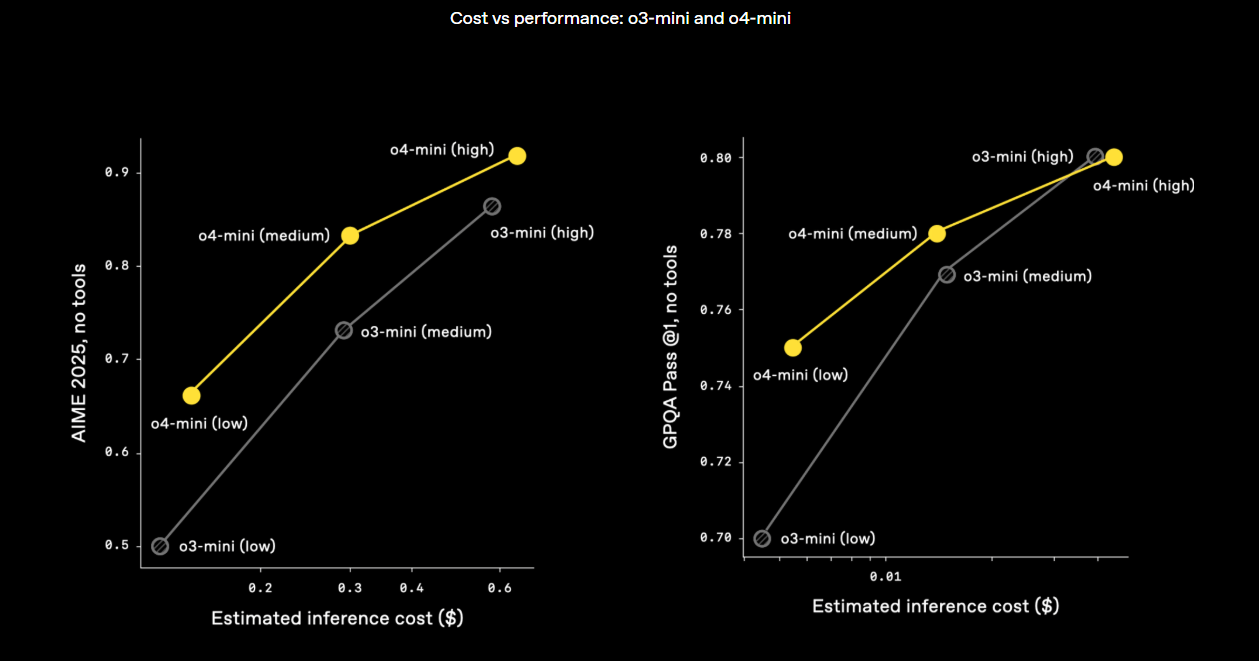

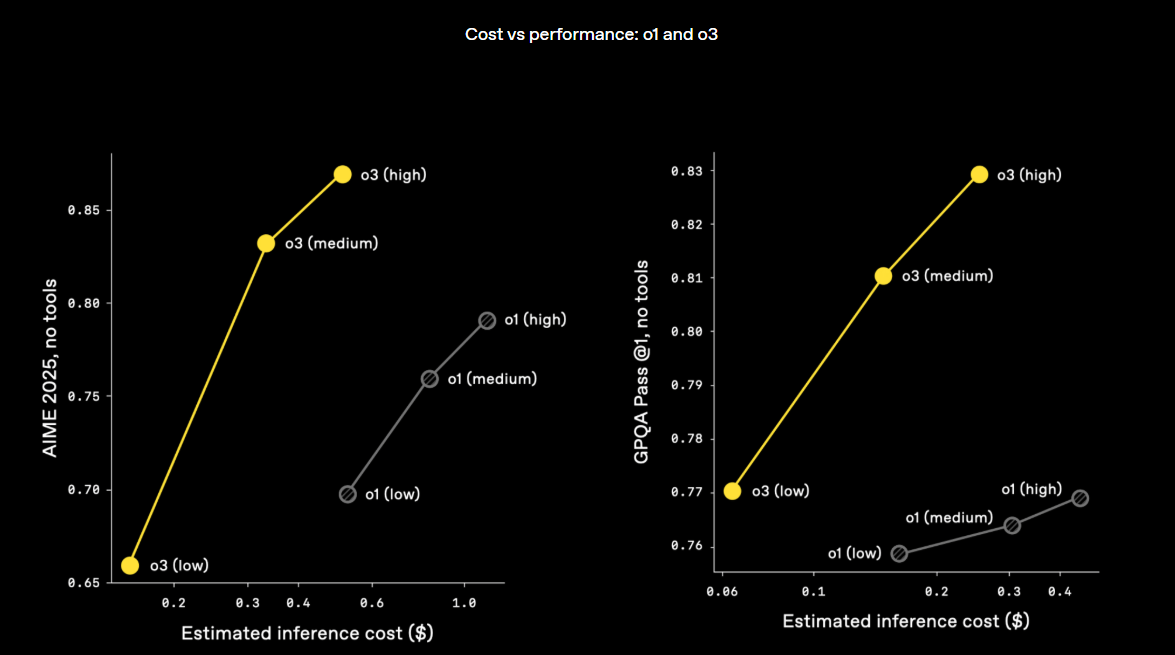

More computing power = better performance

OpenAI says it scaled up both the training compute for reinforcement learning and the inference-time compute spent on the “thinking process” by another order of magnitude, and it is still seeing performance gains. At the same time, o3 and o4-mini are often not only smarter but also more cost-efficient than their predecessors o1 and o3-mini.

OpenAI feels validated in its assumption that combining reinforcement learning with longer “thinking” improves AI model performance.

Through RL, the models have also been taught when and how to use tools purposefully, enhancing their capabilities in open-ended situations, especially in visual reasoning and multi-step processes.

Codex CLI and availability

Paying ChatGPT users (Plus, Pro, Team) can now access o3, o4-mini, and o4-mini-high; Enterprise and Edu accounts will gain access soon. Free users can try o4-mini by selecting “Think” in the composer before submitting a prompt. Developers can access the models via the Chat Completions API and the new Responses API, though they may need to verify their organizations first.
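For developers, a minimal sketch of calling o4-mini through both APIs with OpenAI's official `openai` Python SDK might look like the following. It assumes a recent SDK version that includes the Responses API, an `OPENAI_API_KEY` environment variable, and an organization with access to the model. The helpers `build_responses_request` and `build_chat_request` are hypothetical names introduced only for this illustration, and the effort values are examples, not OpenAI's recommended settings. Treating ChatGPT's o4-mini-high as o4-mini with `effort="high"` is likewise an assumption.

```python
import os

# The three reasoning-effort levels documented for o3 and o4-mini at launch.
EFFORT_LEVELS = {"low", "medium", "high"}


def build_responses_request(prompt: str, model: str = "o4-mini", effort: str = "medium") -> dict:
    """Hypothetical helper: keyword arguments for client.responses.create."""
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"unsupported reasoning effort: {effort!r}")
    # The Responses API takes the prompt as `input` and effort as a nested `reasoning` object.
    return {"model": model, "input": prompt, "reasoning": {"effort": effort}}


def build_chat_request(prompt: str, model: str = "o4-mini", effort: str = "medium") -> dict:
    """Hypothetical helper: keyword arguments for client.chat.completions.create."""
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"unsupported reasoning effort: {effort!r}")
    # Chat Completions takes a list of messages and a flat `reasoning_effort` field.
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "reasoning_effort": effort,
    }


def main() -> None:
    # Imported here so the request builders above work without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    prompt = "Summarize the trade-offs between o3 and o4-mini in two sentences."

    # Responses API: output_text concatenates the text parts of the model's output.
    response = client.responses.create(**build_responses_request(prompt, effort="high"))
    print(response.output_text)

    # Chat Completions API: the answer sits in the first choice's message.
    completion = client.chat.completions.create(**build_chat_request(prompt))
    print(completion.choices[0].message.content)


if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    # Only contact the API when a key is configured.
    main()
```

Keeping request construction separate from the network call makes the parameters easy to inspect: both endpoints accept the same model IDs but differ in how the prompt (`input` vs. `messages`) and the effort setting are passed.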

A model called o3-pro with full tool support is expected to launch in a few weeks. According to OpenAI, future models will combine the reasoning capabilities of the o-series with the conversational and tool capabilities of the GPT series. This likely refers to GPT-5, scheduled for release this summer.

As an experiment, OpenAI is also introducing Codex CLI, a lightweight coding agent that runs locally in the terminal and builds on the reasoning of o3 and o4-mini. It accepts multimodal input on the command line, such as screenshots or sketches, works with the code on the user's machine, and is available as open source on GitHub. OpenAI is also launching a $1 million initiative, paid out in API credits, to support projects that use Codex CLI and OpenAI models.

Limitations in factual knowledge and hallucinations

Despite their advances in tool use and reasoning, the new models still have weaknesses. In the PersonQA evaluation, which tests models on questions about well-known people, o4-mini performs worse than o1 and o3. OpenAI attributes this to the smaller model size: “Smaller models have less world knowledge and are more prone to hallucination.”

There’s also a notable difference between o1 and o3: o3 makes more claims overall, which increases both its correct and its incorrect statements. OpenAI suspects that o3’s stronger reasoning makes it more likely to produce statements even when the information is unclear. Whether this stems from training data, reward functions, or other factors is a question for future research.